Feedforward Neural Network

In an era where artificial intelligence (AI) and machine learning are not just buzzwords but pivotal elements driving innovation across industries, understanding the core mechanisms that fuel these technologies is crucial. Did you know that at the heart of many AI models lies a concept known as the "forward function" in neural networks? This fundamental process, often overshadowed by the complex jargon of data science, plays a critical role in how neural networks learn and make predictions. For professionals and enthusiasts alike, grasping the intricacies of the forward function can unlock new levels of comprehension regarding how neural networks operate and their applications in the real world.

Whether you're a seasoned data scientist or a curious learner, understanding the forward function could be a game-changer in how you perceive and interact with AI technologies.

What is the Forward Function in Neural Networks?

The forward function in neural networks, also known as forward propagation or the forward pass, is the process through which input data is transformed into an output. The points below cover what it is, how it operates, and where it fits among neural network architectures:

Defining Forward Function: At its core, the forward function represents the pathway through which data flows from the input layer, through any hidden layers, to the output layer in a neural network. This process is integral for the network to generate predictions or classifications based on the input it receives.

Operational Mechanics: The journey begins with the input data being fed into the network. As it progresses through each layer, the data undergoes transformations via weighted connections and biases, with the assistance of activation functions. These functions are pivotal in introducing non-linear properties to the network, enabling it to learn and model complex relationships.

Significance and Efficiency: Every prediction a neural network makes is the result of a forward pass, and during training each forward pass produces the output whose error is then used to update the weights. How well the network generalizes to new, unseen data depends on how well those weights were learned; the forward function is the mechanism that applies them to new inputs.

Feedforward vs. Recurrent Neural Networks: While the forward function is a common feature across various neural network architectures, its implementation in feedforward neural networks is distinguished by the unidirectional flow of data. This contrasts with recurrent neural networks, where data can travel in loops, allowing past outputs to influence current decisions.

Mathematical Underpinnings: The mathematical operations underlying forward propagation (for contrast, see Backpropagation) are the multiplication of each layer's inputs by its weight matrix, the addition of biases, and the application of an activation function. The weights and biases are not adjusted during the forward pass itself; that adjustment happens during training, through backpropagation.

Versatility in Applications: The forward function's versatility shines across different neural network architectures, demonstrating its adaptability and critical role in operations ranging from simple pattern recognition to complex decision-making processes.

In essence, the forward function not only powers the neural network's ability to learn from data but also highlights the delicate balance between mathematical principles and practical applications that define the field of artificial intelligence.
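As a sketch of the mathematics described above, forward propagation through a network with $L$ layers can be written as follows. The notation is an assumption introduced here, not taken from the text: $x$ is the input vector, $W^{(l)}$ and $b^{(l)}$ are the weights and biases of layer $l$, $\sigma^{(l)}$ is that layer's activation function, and $\hat{y}$ is the network's output.

```latex
\begin{aligned}
a^{(0)} &= x \\
z^{(l)} &= W^{(l)} a^{(l-1)} + b^{(l)}, \qquad l = 1, \dots, L \\
a^{(l)} &= \sigma^{(l)}\!\left(z^{(l)}\right) \\
\hat{y} &= a^{(L)}
\end{aligned}
```

Each layer's activation $a^{(l)}$ depends only on the previous layer's activation, which is the unidirectional flow that distinguishes feedforward networks from recurrent ones.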

What is a Feedforward Neural Network?

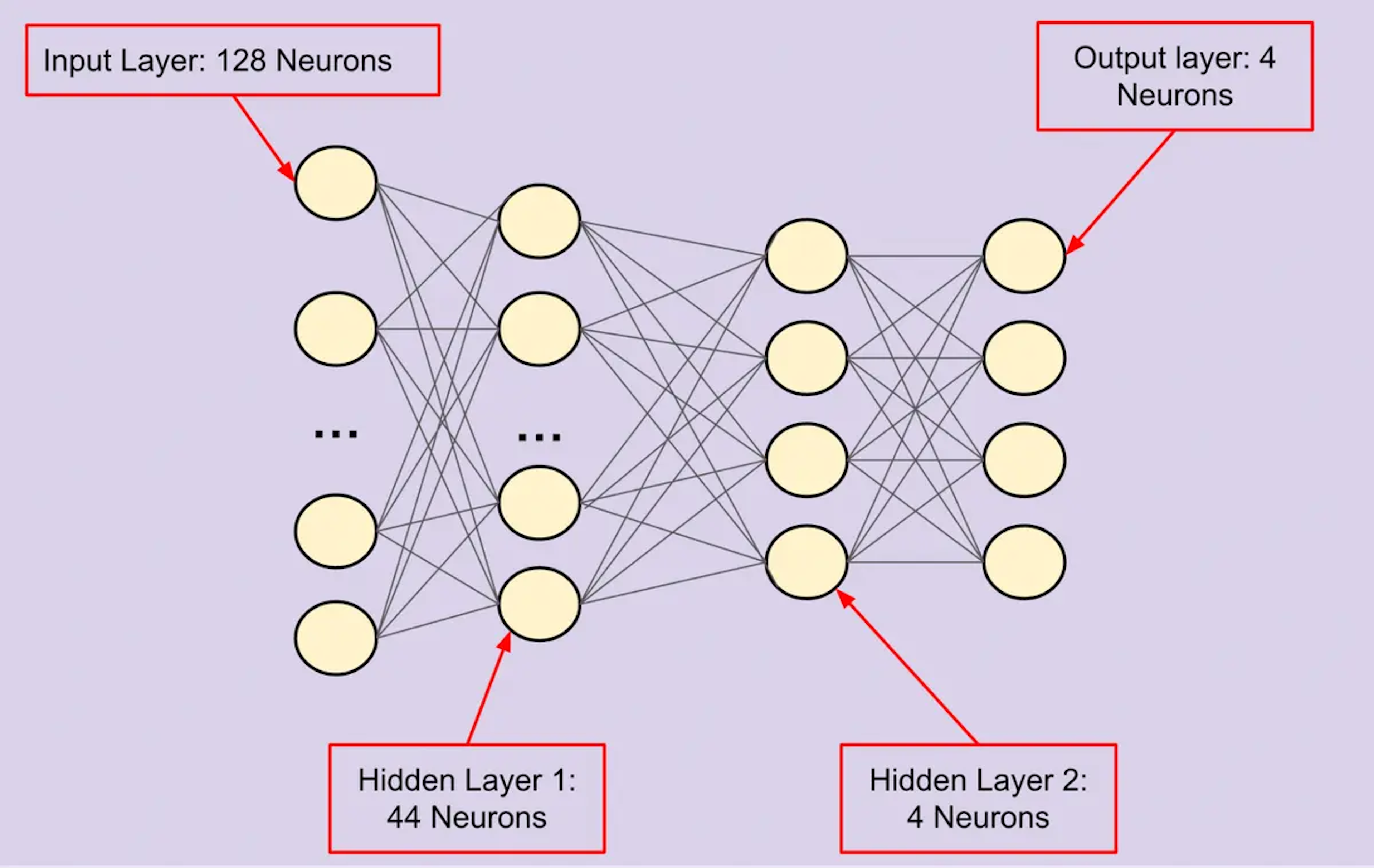

Feedforward Neural Networks (FNNs) present a straightforward yet powerful architecture within the vast universe of artificial intelligence and machine learning. These networks, characterized by their simplicity and directness, stand as the backbone of numerous modern AI applications. Here, we delve into the intricacies of FNNs, exploring their structure, historical significance, mathematical backbone, and real-world applications.

Architecture of FNNs

Direction of Data Flow: Unidirectional, from input to output layers.

Layer Structure: Comprises three types of layers: the input layer, one or more hidden layers, and the output layer.

Functionality: Each layer is designed to perform specific transformations on the data, gradually leading to the desired output.

Absence of Cycles: Unlike recurrent neural networks, FNNs do not have loops, ensuring a straightforward flow of data.
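To make the layered structure concrete, the following minimal sketch counts the trainable parameters of a fully connected feedforward network. It assumes every neuron connects to every neuron in the next layer and has one bias; the function name `count_parameters` and the layer sizes are hypothetical, chosen only for illustration.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected feedforward network.

    layer_sizes lists the number of neurons per layer, input layer first.
    """
    total = 0
    # Pair each layer with the one that follows it: data only flows forward.
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between adjacent layers
        total += n_out         # one bias per neuron in the receiving layer
    return total


# Hypothetical network: 4 input features, hidden layers of 8 and 6 neurons, 3 outputs.
sizes = [4, 8, 6, 3]
print(count_parameters(sizes))  # (4*8+8) + (8*6+6) + (6*3+3) = 115
```

Because there are no cycles, the parameter count is simply a sum over adjacent layer pairs.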

Historical Context and Development

Foundational Role: FNNs have been pivotal in the evolution of neural networks, serving as one of the earliest models to be developed.

Advancements: Over the years, enhancements in computational power and algorithmic efficiency have propelled FNNs to tackle more complex problems.

Significance: Their development marked the beginning of neural networks' journey from theoretical constructs to practical, impactful tools.

Mathematical Models and Algorithms

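Backpropagation: A key algorithm that lets FNNs learn from data by computing how much each weight contributed to the prediction error, that is, the gradient of the error with respect to each weight.

Gradient Descent: Utilized alongside backpropagation; it uses those gradients to adjust the weights in the direction that reduces the error.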

Activation Functions: Functions like ReLU and Sigmoid introduce non-linearity, enabling FNNs to model complex relationships.

Significance of Activation Functions

Enable Non-linearity: These functions allow FNNs to learn and model non-linear relationships within the data.

Variety: Common functions include ReLU, Sigmoid, and Tanh, each with distinct characteristics and applications.

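Role in Learning: Activation functions are critical for deep learning: without them, a stack of layers collapses into a single linear transformation, no matter how many layers it has. They also determine how strongly each neuron responds to a given input, which helps the network emphasize inputs that are relevant to the task at hand.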
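The three activation functions named above can be sketched in a few lines of plain Python. This is a minimal illustration using only the standard library; the sample inputs are arbitrary.

```python
import math


def relu(z):
    # Passes positive values through unchanged and clips negatives to zero.
    return max(0.0, z)


def sigmoid(z):
    # Squashes any real number into the open interval (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    # Squashes any real number into the open interval (-1, 1), centered at zero.
    return math.tanh(z)


for z in (-2.0, 0.0, 2.0):
    print(z, relu(z), round(sigmoid(z), 4), round(tanh(z), 4))
```

ReLU is unbounded above and exactly zero for negative inputs, while Sigmoid and Tanh saturate at both ends; these differences are what the "distinct characteristics" refers to.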

Real-World Applications

Pattern Recognition: FNNs excel in identifying patterns within data, a fundamental task in various fields such as security and healthcare.

Classification Tasks: From image classification to sentiment analysis, FNNs provide the computational mechanism for categorizing data into distinct classes.

Innovative Implementations: Beyond traditional applications, FNNs contribute to solving complex problems like stock market prediction and autonomous vehicle navigation.

Feedforward Neural Networks, with their unidirectional flow of data, absence of cycles, and reliance on backpropagation and activation functions, form a crucial component of the AI and machine learning landscape. Their ability to model complex relationships through a relatively simple architecture has made them indispensable in both historical and contemporary contexts. From the foundational development to cutting-edge applications in pattern recognition and classification, FNNs demonstrate the versatility and robustness of neural network models, underscoring their significance in driving forward the frontiers of artificial intelligence.

How Feedforward Neural Networks Work

Delving into the operational mechanics of Feedforward Neural Networks (FNNs) reveals how data is transformed step by step, from input to eventual output. This exploration sheds light on the processes that enable these networks to perform tasks ranging from simple classification to complex pattern recognition.

The Initial Input Layer

Data Entry Point: The input layer serves as the gateway for data into the network, where each neuron represents a feature of the input vector.

Normalization: Often, input data undergoes normalization to ensure that it fits within a scale conducive to neural network processing.

Role: This layer doesn't perform any computation; instead, it distributes data to the hidden layers where the actual processing begins.
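The text does not say which normalization scheme is used, so the sketch below shows two common choices as assumptions: min-max scaling to the range [0, 1] and standardization to zero mean and unit variance. The function names and the `ages` feature are hypothetical.

```python
def min_max_scale(values):
    """Rescale a feature so its smallest value maps to 0 and its largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Shift and scale a feature to zero mean and unit (population) variance."""
    n = len(values)
    mean = sum(values) / n
    std = (sum((v - mean) ** 2 for v in values) / n) ** 0.5
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


# Hypothetical raw feature: one value per training example.
ages = [20.0, 30.0, 40.0, 60.0]
print(min_max_scale(ages))  # [0.0, 0.25, 0.5, 1.0]
print(standardize(ages))
```

In practice the scaling statistics are computed on the training data and reused unchanged for new inputs, so that the network sees features on the same scale at prediction time.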

Weighted Inputs and Activation Functions

Summation Process: In hidden layers, each neuron receives weighted inputs from the preceding layer's neurons. The weights represent the strength or importance of the input signals.

Activation Function: After summing the weighted inputs, an activation function is applied. This step introduces non-linearity, allowing the network to learn and model complex patterns.

Examples: Common activation functions include ReLU for hidden layers and softmax for the output layer in classification tasks.
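The summation and activation steps for a single hidden neuron can be sketched as follows. The weights, bias, and inputs are hypothetical values picked so the arithmetic is easy to follow, and ReLU is assumed as the activation.

```python
def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through ReLU."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, z)  # ReLU: negative sums are clipped to zero


x = [1.0, 2.0, 3.0]    # outputs of the preceding layer
w = [0.5, -0.25, 0.5]  # one weight per incoming connection
b = 0.5

# 0.5*1.0 + (-0.25)*2.0 + 0.5*3.0 + 0.5 = 2.0
print(neuron_output(x, w, b))  # 2.0
```

A larger weight magnitude gives the corresponding input more influence on the sum, and a negative weight makes that input push the neuron toward zero output.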

Layer-wise Processing

Sequential Data Transformation: Each layer's output serves as the input for the next, creating a chain of transformations that refine the data at each step.

Information Distillation: Through this layer-wise processing, the network distills the input data, extracting and emphasizing features relevant to the task at hand.
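The chaining of layers can be sketched as a loop in which each layer's output becomes the next layer's input. This is a minimal pure-Python illustration, not an optimized implementation; the parameters are hand-picked hypothetical values rather than trained weights.

```python
def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(x, layers):
    """Run the input through every layer in order."""
    a = x
    for weights, biases, activation in layers:
        a = dense(a, weights, biases, activation)  # output feeds the next layer
    return a


# Hypothetical network: 2 inputs -> 2 hidden neurons (ReLU) -> 1 linear output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),
    ([[1.0, 2.0]], [0.5], identity),
]

# Hidden layer: [relu(2 - 1), relu(1 + 0.5)] = [1.0, 1.5]
# Output layer: 1.0*1.0 + 2.0*1.5 + 0.5 = 4.5
print(forward([2.0, 1.0], layers))  # [4.5]
```

Production libraries perform the same computation with matrix operations on batches of inputs; the loop form here is only meant to expose the sequence of transformations.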

The Output Layer

Final Transformation: The output layer converts the processed data from the hidden layers into a format suitable for the specific task, such as a probability distribution in classification tasks.

Decision Making: This layer's activation function often differs from those in hidden layers, tailored to produce the final prediction or classification result.
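For classification, a common output activation is softmax, which turns the output layer's raw scores into a probability distribution. The sketch below assumes three classes and hypothetical raw scores; subtracting the maximum score before exponentiating is a standard trick to avoid overflow and does not change the result.

```python
import math


def softmax(scores):
    """Convert raw output-layer scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for three classes.
probs = softmax([2.0, 1.0, 0.1])
predicted_class = probs.index(max(probs))  # index of the most probable class

print([round(p, 3) for p in probs])
print(predicted_class)
```

The predicted class is simply the index with the highest probability, and the probabilities themselves can be read as the network's confidence in each class.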

The Backpropagation Algorithm

Learning Mechanism: Backpropagation is the heart of learning in FNNs. It propagates the error between the predicted and actual outputs backward through the network to compute how much each weight contributed to that error.

Gradient Descent: Gradient descent then uses these gradients to minimize error, iteratively nudging weights in the direction that reduces the overall prediction error.
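The following sketch works through backpropagation and gradient descent on the smallest network that still has a hidden layer: one input, one tanh hidden neuron, and one linear output. The architecture, the squared-error loss, the starting parameters, and the single training example are all assumptions chosen to keep the chain rule visible.

```python
import math


def forward(params, x):
    """Forward pass: y = w2 * tanh(w1 * x + b1) + b2."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    y = w2 * h + b2
    return h, y


def loss(params, x, target):
    """Squared error between the prediction and the target."""
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backprop(params, x, target):
    """Gradient of the loss for each parameter, via the chain rule, output first."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    dy = y - target          # dL/dy
    dw2 = dy * h             # output weight
    db2 = dy                 # output bias
    dh = dy * w2             # error passed back to the hidden neuron
    dz = dh * (1.0 - h * h)  # tanh'(z) = 1 - tanh(z)^2
    dw1 = dz * x             # hidden weight
    db1 = dz                 # hidden bias
    return [dw1, db1, dw2, db2]


def sgd_step(params, grads, lr):
    """Move every parameter a small step against its gradient."""
    return [p - lr * g for p, g in zip(params, grads)]


params = [0.5, 0.0, -0.3, 0.1]  # hypothetical starting values
x, target = 1.5, 1.0            # a single made-up training example

before = loss(params, x, target)
for _ in range(50):
    params = sgd_step(params, backprop(params, x, target), lr=0.1)
after = loss(params, x, target)

print(before, after)  # the loss after training is smaller than before
```

Real training repeats this over many examples, usually in mini-batches, and frameworks compute the gradients automatically; the manual derivation above is only meant to show what is being computed.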

Learning Rate and Hyperparameters

Learning Rate: This critical hyperparameter controls the step size during weight adjustment, balancing speed against the risk of overshooting the minimum error.

Tuning Hyperparameters: Besides the learning rate, other hyperparameters like the number of layers and neurons per layer greatly influence the network's performance and accuracy.
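The effect of the learning rate can be seen on a toy problem. The sketch below runs gradient descent on the one-parameter loss (w - 3)^2, whose minimum is at w = 3; the loss function, the step count, and the three learning rates are assumptions chosen for illustration.

```python
def minimize(lr, steps=20, w=0.0):
    """Gradient descent on the toy loss (w - 3)^2, starting from w."""
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)  # derivative of (w - 3)^2
        w -= lr * grad          # step size is controlled by the learning rate
    return w


for lr in (0.01, 0.1, 1.1):
    print(lr, minimize(lr))
```

With a learning rate of 0.01 the parameter is still far from 3 after 20 steps, with 0.1 it is close, and with 1.1 each step overshoots the minimum by more than the previous error, so the parameter diverges.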

Challenges and Limitations

Overfitting: A common challenge where the network learns the training data too well, including its noise, leading to poor generalization to new data.

Vanishing Gradient Problem: In deep networks, gradients can become exceedingly small during backpropagation, severely slowing down learning or halting it altogether.
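The vanishing gradient problem can be illustrated with a simplified model: a chain of single-neuron sigmoid layers that all share the same pre-activation value and weight. Those simplifications, and the function names, are assumptions made for this sketch. The derivative of the sigmoid is at most 0.25, so the gradient shrinks by at least a factor of four per layer when the weights are around 1.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # never exceeds 0.25; the maximum is reached at z = 0


def gradient_scale(depth, z=0.0, weight=1.0):
    """Factor applied to a gradient after passing back through `depth` layers."""
    scale = 1.0
    for _ in range(depth):
        scale *= weight * sigmoid_grad(z)  # one chain-rule factor per layer
    return scale


for depth in (1, 5, 10, 20):
    print(depth, gradient_scale(depth))
```

Even in this best case for the sigmoid (z = 0), the gradient reaching the earliest of 10 layers is scaled by 0.25 to the tenth power, which is less than one millionth. This is one reason ReLU, whose derivative is 1 for positive inputs, is often preferred in hidden layers.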

Feedforward Neural Networks, with their structured and layer-wise approach to processing data, embody a powerful method for tackling diverse computational tasks. From the initial input to the nuanced adjustments of backpropagation, each step in an FNN's operation contributes to its ability to discern patterns and make predictions. Despite facing challenges like overfitting and the vanishing gradient problem, advancements in network design and training methodologies continue to harness the potential of FNNs, pushing the boundaries of what artificial intelligence can achieve.

Applications of Feedforward Neural Networks

Feedforward Neural Networks (FNNs) have made an indelible mark across a broad spectrum of industries, revolutionizing how data is interpreted, patterns are recognized, and decisions are made. These applications showcase the versatility and efficiency of FNNs in tackling complex challenges.

Image and Speech Recognition

Complex Pattern Recognition: FNNs excel in identifying intricate patterns within vast datasets, making them ideal for image and speech recognition tasks.

Accuracy and Efficiency: Their ability to classify images and interpret speech with high accuracy has led to significant advancements in automated customer service, security systems, and user interface accessibility.

Financial Forecasting

Stock Market Predictions: By analyzing historical market data, FNNs can predict stock price trends, offering valuable insights for investors and traders.

Credit Risk Assessment: Financial institutions employ FNNs to evaluate the creditworthiness of borrowers, enhancing the accuracy of risk management strategies.

Medical Diagnosis

Disease Identification: FNNs are increasingly used in radiology to detect diseases from medical images, such as X-rays and MRIs, with remarkable precision.

Patient Data Analysis: Analyzing structured patient data, FNNs assist in diagnosing conditions early, improving patient outcomes.

Natural Language Processing (NLP)

Sentiment Analysis: FNNs analyze text to determine the sentiment behind it, benefiting marketing strategies and customer service by understanding consumer emotions.

Text Classification: In academia and industry, FNNs categorize text into predefined classes, streamlining the process of sorting through large volumes of documents.

Robotics

Navigation and Object Detection: Robots equipped with FNNs can navigate complex environments and identify objects, enhancing their autonomy and utility in tasks ranging from industrial manufacturing to domestic chores.

Decision-Making Processes: In critical applications, such as surgical robots and autonomous vehicles, FNNs contribute to the decision-making process, ensuring precision and safety.

Emerging Fields

Bioinformatics: In the quest to understand biological data, FNNs play a crucial role in gene sequence analysis and the modeling of biological processes.

Environmental Modeling: FNNs assist in predicting climate patterns and assessing environmental changes, supporting efforts in conservation and disaster preparedness.

The applications of Feedforward Neural Networks illuminate the transformative potential of this technology across various domains. From enhancing the accuracy of medical diagnoses to enabling the autonomy of robots, FNNs continue to push the boundaries of what artificial intelligence can achieve, marking an era of innovation and discovery.

Examples of Feedforward Neural Networks

Feedforward Neural Networks (FNNs) stand at the forefront of technological innovation, transforming a multitude of industries through their capacity to learn from data and improve over time. Below are several case studies and examples where FNNs have been instrumental in solving complex problems, highlighting their diverse applications.

Handwriting Recognition in Postal Services

Digital Transformation: Postal services worldwide have adopted FNNs for digit recognition, effectively sorting millions of mail pieces daily.

Enhanced Efficiency: This application not only speeds up the sorting process but also reduces human error, ensuring that your mail reaches its intended destination faster and more reliably.

Customer Sentiment Analysis for Businesses

Understanding Consumer Emotions: Businesses leverage FNNs to sift through customer feedback on social media and review platforms, gaining insights into public sentiment.

Strategic Decision-Making: This analysis guides product development, marketing strategies, and customer service improvements, directly impacting company growth and customer satisfaction.

Autonomous Vehicle Technology

Improving Road Safety: FNNs play a critical role in object detection and traffic sign recognition, essential technologies for autonomous vehicles.

Enhanced Navigation: By accurately identifying and interpreting road signs and obstacles, FNNs contribute to safer, more efficient autonomous driving experiences.

Game AI Development

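Simulating Human Opponents: Feedforward networks, typically combined with search algorithms, have been used in systems that play complex board games at a very high level, offering players challenging and unpredictable opponents.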

Learning from Gameplay: Through continuous learning, these neural networks adapt and evolve, providing a dynamic gaming experience that mimics human strategy and unpredictability.

Predictive Maintenance in Industrial Settings

Forecasting Equipment Failures: Industries employing FNNs for predictive maintenance can anticipate equipment malfunctions before they occur.

Cost Reduction and Efficiency: This foresight minimizes downtime and maintenance costs, significantly enhancing operational efficiency and productivity.

Smart Home Systems

Energy Management: FNNs optimize energy consumption in smart homes by learning patterns of usage and adjusting controls accordingly.

Security Monitoring: Advanced detection algorithms powered by FNNs enhance home security systems, identifying potential threats with remarkable accuracy.

These examples underscore the versatility and efficiency of Feedforward Neural Networks in parsing complex data sets, learning from them, and making informed predictions or decisions. From enhancing the speed and reliability of postal services to ensuring safer autonomous driving and smarter homes, FNNs continue to drive innovation across various fields. Their application in predictive maintenance showcases their potential to save costs and prevent industrial setbacks, while their role in gaming AI demonstrates their ability to mimic human-like decision-making processes, providing both entertainment and a platform for advanced AI research. As these technologies evolve, the future applications of FNNs are boundless, promising even more sophisticated solutions to the world's most intricate challenges.

Feedforward Vs. Deep Neural Networks

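Feedforward neural networks (FNNs) and deep neural networks (DNNs) are often presented as contrasting paradigms, but the two terms describe different properties: "feedforward" refers to the direction of data flow, while "deep" refers to the number of layers. A feedforward network with many hidden layers is itself a deep network, so the comparison in this section is mainly between shallow feedforward networks and deeper architectures. Understanding their distinctions, applications, and limitations provides insight into the current and future trajectory of neural network research.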

Defining Deep Neural Networks (DNNs)

DNNs are a subclass of artificial neural networks characterized by their depth; that is, the number of hidden layers between the input and output layers. Unlike shallow feedforward neural networks, which have a single hidden layer or even none, DNNs can have dozens or hundreds, enabling them to capture complex patterns in high-dimensional data.

Complex Pattern Recognition: DNNs excel in tasks requiring the recognition of intricate patterns within data, a capability attributed to their deep architecture.

Hierarchical Feature Learning: These networks learn progressively higher-level features at each layer, a process loosely inspired by how the human brain is thought to process information in stages.

Depth in Neural Networks

The concept of depth in neural networks is foundational to understanding the distinction between FNNs and DNNs. Depth refers to the number of layers through which data passes, from input to output.

Impact on Learning: Additional layers allow DNNs to perform more abstract forms of computation, enabling the extraction of nuanced features that simpler models might miss.

Complexity vs. Clarity: While deeper networks can model complex relationships, they also introduce challenges in training, including the notorious vanishing gradient problem.

Advantages of Deep Neural Networks

The capabilities of DNNs extend far beyond those of their shallower counterparts, especially for tasks involving large and complex datasets.

Handling High-Dimensional Data: DNNs thrive in environments with vast amounts of data, making them ideal for image and speech recognition tasks.

Superior Performance: In fields like computer vision and natural language processing, DNNs have consistently outperformed traditional models, setting new standards for accuracy and efficiency.

Challenges in Training DNNs

Despite their advantages, training DNNs is not without its challenges, primarily due to their depth and complexity.

Computational Complexity: The training of DNNs demands significant computational resources, often necessitating the use of specialized hardware like GPUs.

Large Datasets Required: The effectiveness of a DNN correlates with the size of the dataset on which it is trained, requiring substantial amounts of data to perform optimally.

From Feedforward to Deep Neural Networks

The evolution from shallow FNNs to DNNs, including the development of convolutional neural networks (CNNs), marks a significant milestone in the field of artificial intelligence.

Breakthroughs in Computer Vision: CNNs, a type of DNN that is itself feedforward in structure, have revolutionized computer vision, enabling applications from facial recognition to autonomous vehicle navigation.

Influence on Research: The success of DNNs, particularly CNNs, has shifted the focus of neural network research towards exploring deeper and more complex architectures.

Ongoing Debate and Future Trajectory

The debate over the utility of shallow FNNs versus the potential of DNNs is ongoing, with each offering distinct advantages.

Limitations of FNNs: While simpler and easier to train, shallow FNNs struggle with complex pattern recognition, a limitation that DNNs overcome with their depth.

Potential of Deep Learning Models: The advancements in DNNs, driven by deep learning, suggest a future where AI systems can learn and reason with a degree of sophistication approaching human-like cognition.

The exploration of neural networks, whether feedforward or deep, is a journey towards understanding intelligence itself. As researchers continue to push the boundaries of what these architectures can achieve, the future of neural network research appears both promising and boundless. The convergence of theoretical breakthroughs and practical applications promises to usher in a new era of AI, one that is likely to redefine the landscape of technology and its role in society.