Graph Neural Networks

Ever wondered how social platforms suggest friends with uncanny accuracy, or how researchers predict the properties of molecules for drug discovery? Both problems involve graph-structured data, and a key part of the answer is Graph Neural Networks (GNNs), a family of architectures designed to learn from the complex relationships in such data.

This article unpacks what GNNs are and why they matter across a range of domains. Starting from the core principles that set them apart from traditional neural networks, we look at the mathematics of how they learn, the main architectural variants, and their practical applications in AI and machine learning.

What Are Graph Neural Networks?

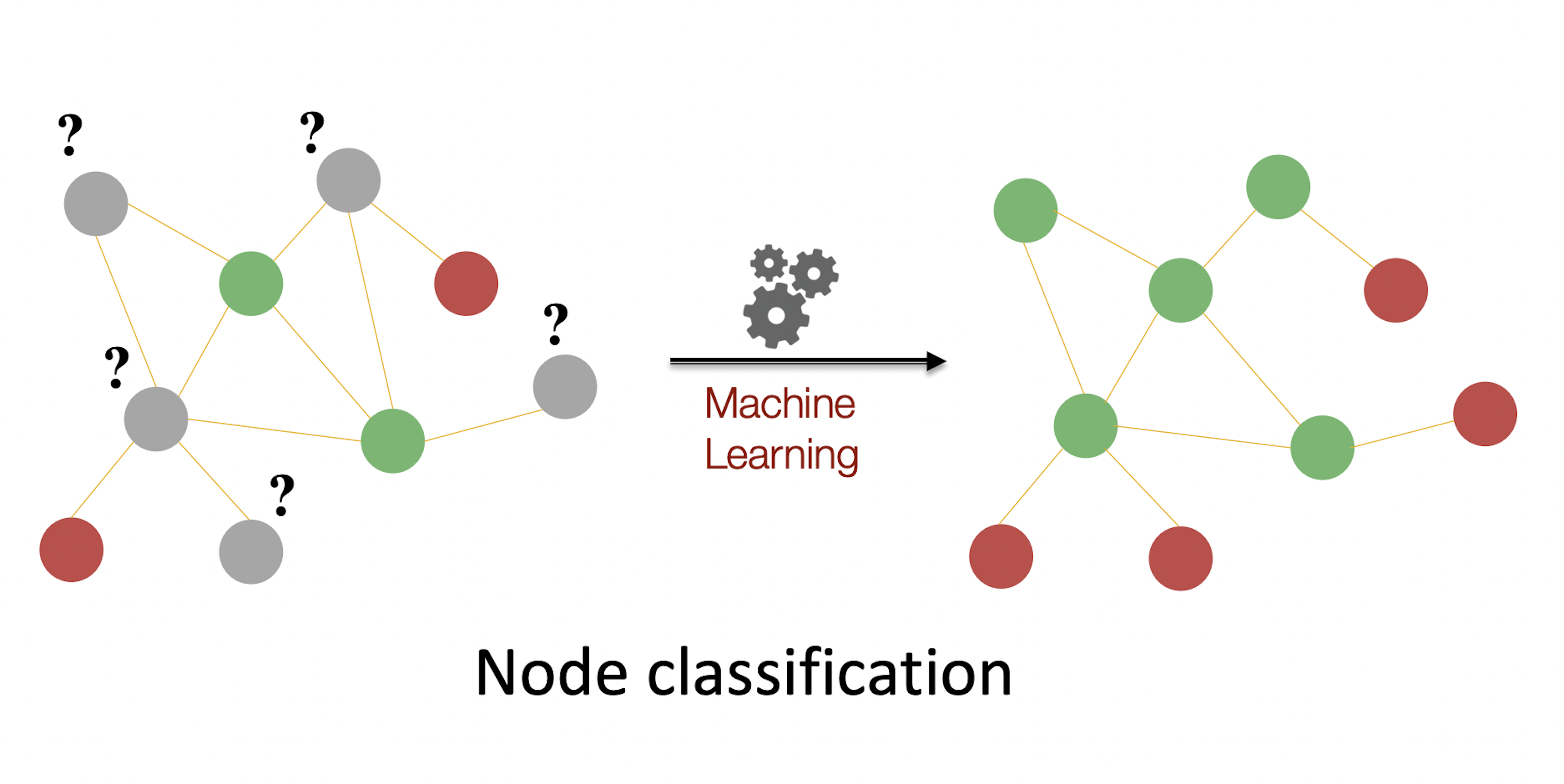

Graph Neural Networks (GNNs) are a family of neural network architectures tailored to the challenges posed by graph-structured data. At their core, GNNs capture the dependencies between nodes in a graph, which sets them apart from standard architectures such as multilayer perceptrons and convolutional networks that expect fixed-size vectors or regular grids as input. This ability comes from a design that uses the information in nodes, edges, and the way they are interconnected. According to Analytics Vidhya, GNNs perform well on tasks such as node classification, link prediction, and graph clustering, which shows their versatility.

Graph-structured data, abundant in social networks, molecular structures, and recommendation systems, represents entities as nodes and the relationships between them as edges, mirroring the structure of many real-world systems. GNNs grew out of early attempts to process such data and have developed rapidly into a cornerstone of modern AI and machine learning. This growth reflects a broader recognition that interconnected data matters and needs specialized tools to analyze it effectively.

Central to how GNNs work is the mathematical framework of message passing between nodes. Each node aggregates information from its neighbors and uses it to update its own state, and repeating this step lets information spread across the graph. Because these updates draw on both node and edge information, GNNs can make predictions about individual nodes, pairs of nodes, or entire graphs across diverse applications.

Node classification illustration (Source: Stanford CS 224W)
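One common way to write the message-passing step described above is shown below. The notation is a generic convention rather than one taken from the sources cited here: h_v^(k) is node v's state after k rounds, N(v) is the set of v's neighbors, and AGG and UPDATE are learned functions.

```latex
\mathbf{m}_v^{(k)} = \mathrm{AGG}^{(k)}\!\left(\left\{\, \mathbf{h}_u^{(k-1)} : u \in \mathcal{N}(v) \,\right\}\right),
\qquad
\mathbf{h}_v^{(k)} = \mathrm{UPDATE}^{(k)}\!\left(\mathbf{h}_v^{(k-1)},\, \mathbf{m}_v^{(k)}\right),
\qquad
\mathbf{h}_v^{(0)} = \mathbf{x}_v
```

Here x_v is node v's input feature vector. AGG must give the same result regardless of the order in which neighbors are listed (sum, mean, and max all qualify), and after K rounds h_v^(K) depends on every node within K hops of v.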

How Graph Neural Networks Work

Understanding how Graph Neural Networks (GNNs) operate requires a look at the foundational principles of graph theory and how they are used to organize complex datasets. GNNs build directly on these principles to model data structured as graphs.

The Importance of Graph Theory

Graph Theory in Data Organization: At the heart of GNNs lies graph theory, a mathematical scaffold that provides a systematic way of representing relationships within data. The principles of graph theory guide the organization of datasets into a collection of nodes (entities) and edges (relationships), offering a mirror to real-world structures.

Application by GNNs: GNNs use these principles to process data whose structure traditional neural networks are not designed to handle directly. By exploiting the connections between data points, GNNs can uncover patterns and relationships that are hidden within the graph.
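As a concrete illustration of organizing data into nodes and edges, here is a minimal plain-Python sketch of two standard ways to store a graph: an adjacency list and an adjacency matrix. The toy friendship data and the function names adjacency_list and adjacency_matrix are hypothetical, chosen only for this example.

```python
# Toy social graph (hypothetical): four people (nodes) and their friendships (edges).
nodes = ["alice", "bob", "carol", "dave"]
edges = [("alice", "bob"), ("bob", "carol"), ("carol", "alice"), ("carol", "dave")]


def adjacency_list(nodes, edges):
    """Map each node to the set of its neighbours (edges treated as undirected)."""
    adj = {name: set() for name in nodes}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return adj


def adjacency_matrix(nodes, edges):
    """Dense 0/1 matrix A where A[i][j] == 1 if nodes i and j are connected."""
    index = {name: i for i, name in enumerate(nodes)}
    n = len(nodes)
    A = [[0] * n for _ in range(n)]
    for u, v in edges:
        i, j = index[u], index[v]
        A[i][j] = A[j][i] = 1  # undirected, so the matrix is symmetric
    return A


print(adjacency_matrix(nodes, edges))
# [[0, 1, 1, 0], [1, 0, 1, 0], [1, 1, 0, 1], [0, 0, 1, 0]]
```

The adjacency list is compact for sparse graphs, while the matrix form is convenient for the matrix operations many GNN layers are written in.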

The Iterative Process of Message Passing

Node State Updates: The defining feature of GNNs is their message-passing mechanism, in which each node gathers and aggregates information from its neighboring nodes. Repeating this step lets each node update its state from the information it receives; after k rounds, a node's state reflects information from nodes up to k hops away, giving it a progressively broader view of the graph's structure.

Influence of Node and Edge Features: Both node features (attributes of the entities) and edge features (attributes of the relationships) play a crucial role in this process. They influence how information is passed and aggregated across the network, directly impacting the accuracy of the GNN's outputs.
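The following is a minimal sketch of one message-passing round in plain Python. It assumes a single scalar weight per edge as a stand-in for richer edge features and a parameter-free update (averaging a node's old state with its aggregated messages); the function name message_passing_step and its aggregate argument are hypothetical. The sum, mean, and max options show how the choice of aggregation function changes what a node receives.

```python
def message_passing_step(h, edge_weights, aggregate="mean"):
    """One round of message passing on an undirected graph.

    h: dict mapping node -> current state vector (list of floats)
    edge_weights: dict mapping (u, v) -> float, a scalar stand-in for edge features
    aggregate: "sum", "mean", or "max"
    """
    # 1. Messages: each edge sends each endpoint's state to the other, scaled by the edge weight.
    inbox = {v: [] for v in h}
    for (u, v), w in edge_weights.items():
        inbox[v].append([w * x for x in h[u]])
        inbox[u].append([w * x for x in h[v]])

    new_h = {}
    for v, msgs in inbox.items():
        dim = len(h[v])
        # 2. Aggregation: combine the incoming messages element-wise.
        if not msgs:
            agg = [0.0] * dim
        elif aggregate == "sum":
            agg = [sum(m[i] for m in msgs) for i in range(dim)]
        elif aggregate == "mean":
            agg = [sum(m[i] for m in msgs) / len(msgs) for i in range(dim)]
        elif aggregate == "max":
            agg = [max(m[i] for m in msgs) for i in range(dim)]
        else:
            raise ValueError(f"unknown aggregator: {aggregate}")
        # 3. Update: a real GNN applies learned weights and a nonlinearity here;
        #    this sketch just averages the old state with the aggregate.
        new_h[v] = [(a + b) / 2 for a, b in zip(h[v], agg)]
    return new_h


h = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
edge_weights = {("a", "b"): 1.0, ("b", "c"): 0.5}
print(message_passing_step(h, edge_weights, "mean")["b"])  # [0.375, 0.625]
```

Node b has two neighbors, so switching between "sum", "mean", and "max" gives it three different updated states from the same messages.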

Learning in GNNs

Adjustment of Weights: Learning in GNNs revolves around adjusting the network's weights during training, typically by gradient descent with backpropagation, to minimize a loss function that measures the gap between predicted and actual outcomes. In effect, this tunes the network to the underlying patterns and relationships in the graph data.

Role of Aggregation Functions: The aggregation function (common choices are sum, mean, and max) determines how information from a node's neighbors is combined. This choice can significantly affect performance because it shapes what each node receives: a sum preserves how many neighbors a node has, a mean normalizes that count away, and a max keeps only the strongest signal in each feature.

Hyperparameters' Impact: The learning rate and other hyperparameters, such as the number of message-passing layers and the size of the node embeddings, steer the learning process. The learning rate sets the size of each weight update: too large and training can become unstable or diverge, too small and it converges slowly.
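To make weight adjustment and the learning rate concrete, here is a minimal training sketch in plain Python. It assumes a deliberately simplified model: one weight-free mean-aggregation step followed by a single linear layer and softmax, trained with cross-entropy on two labeled nodes of a made-up graph. The names mean_aggregate, train, and predict, and the data, are hypothetical; practical GNNs stack several learned layers and rely on a framework's automatic differentiation.

```python
import math

# Toy graph (hypothetical): two triangles, 0-1-2 and 3-4-5, joined by the edge 2-3.
EDGES = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
A = [[0] * 6 for _ in range(6)]
for u, v in EDGES:
    A[u][v] = A[v][u] = 1
X = [[1.0, 0.0] for _ in range(3)] + [[0.0, 1.0] for _ in range(3)]  # made-up features


def mean_aggregate(A, X):
    """Average each node's features with its neighbours' (row-normalised (A + I) X)."""
    n = len(A)
    out = []
    for i in range(n):
        group = [j for j in range(n) if A[i][j] or i == j]
        out.append([sum(X[j][k] for j in group) / len(group) for k in range(len(X[0]))])
    return out


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def train(A, X, labels, num_classes, lr=0.5, epochs=200):
    """Fit W in softmax(mean_aggregate(A, X) @ W) by full-batch gradient descent.

    labels maps node index -> class for the labelled nodes only.
    Returns the learned weights and the mean cross-entropy loss per epoch.
    """
    H = mean_aggregate(A, X)  # the aggregation step has no weights in this sketch
    d = len(X[0])
    W = [[0.0] * num_classes for _ in range(d)]
    losses = []
    for _ in range(epochs):
        grad = [[0.0] * num_classes for _ in range(d)]
        loss = 0.0
        for i, y in labels.items():
            logits = [sum(H[i][k] * W[k][c] for k in range(d)) for c in range(num_classes)]
            p = softmax(logits)
            loss -= math.log(p[y])
            for c in range(num_classes):
                g = p[c] - (1.0 if c == y else 0.0)  # d(loss)/d(logit_c)
                for k in range(d):
                    grad[k][c] += H[i][k] * g
        n = len(labels)
        losses.append(loss / n)
        # Gradient step: the learning rate lr sets how far the weights move.
        for k in range(d):
            for c in range(num_classes):
                W[k][c] -= lr * grad[k][c] / n
    return W, losses


def predict(A, X, W):
    H = mean_aggregate(A, X)
    d, C = len(W), len(W[0])
    return [max(range(C), key=lambda c: sum(H[i][k] * W[k][c] for k in range(d)))
            for i in range(len(H))]


W, losses = train(A, X, labels={0: 0, 5: 1}, num_classes=2)
print(predict(A, X, W))  # [0, 0, 0, 1, 1, 1]
```

Only nodes 0 and 5 are labeled, yet the learned weights classify every node, because aggregation gives the bridge nodes 2 and 3 features that lean toward their own triangle. Changing lr or epochs and inspecting losses shows how these hyperparameters affect how quickly the loss falls.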

Handling of Directed and Undirected Graphs

Directed vs. Undirected Graphs: GNNs can operate on both directed and undirected graphs, but the two are handled differently. In directed graphs, each relationship points from one node to another; in undirected graphs, relationships are symmetric, so information can flow both ways along every edge.

Implications for Applications: This distinction matters in practice. In social network analysis, for instance, directed edges might represent one-way followership, while undirected edges could denote mutual friendships. Encoding edge direction correctly lets a GNN model who influences whom, rather than treating every relationship as symmetric.
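The difference shows up directly in the adjacency matrix. The plain-Python sketch below builds the same hypothetical follower data both ways; the helper names are illustrative only. It assumes the common convention that a node receives messages from its in-neighbors, the nodes whose edges point into it; which direction messages travel is a modeling choice.

```python
def build_adjacency(n, edges, directed):
    """0/1 adjacency matrix; A[u][v] == 1 means an edge u -> v."""
    A = [[0] * n for _ in range(n)]
    for u, v in edges:
        A[u][v] = 1
        if not directed:
            A[v][u] = 1  # undirected: store the reverse direction too
    return A


def in_neighbors(A, v):
    """Nodes with an edge pointing into v (the senders of v's messages)."""
    return [u for u in range(len(A)) if A[u][v]]


def out_neighbors(A, v):
    """Nodes that v points to."""
    return [w for w in range(len(A)) if A[v][w]]


# Hypothetical follower data: 0 follows 1, while 1 and 2 follow each other.
follows = [(0, 1), (1, 2), (2, 1)]
directed = build_adjacency(3, follows, directed=True)
undirected = build_adjacency(3, follows, directed=False)

print(in_neighbors(directed, 0), in_neighbors(undirected, 0))  # [] [1]
```

In the directed version, node 0 receives nothing from the account it follows; treating the graph as undirected would let information flow back to it as if the relationship were mutual.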

By examining graph theory, message passing, learning mechanisms, and the handling of different graph types, we can see what makes these networks effective. GNNs represent a significant advance in our ability to interpret and use graph-structured data, opening new directions for AI and machine learning research and applications.

Types of Graph Neural Networks

Graph Neural Networks (GNNs) have matured into a versatile tool for handling graph-structured data and the complex relationships and dependencies it contains. Their architectures have also diversified, with variants tailored to specific data types and problems. Data scientists at CRED, as featured in Analytics India Magazine, have outlined several main variants of GNNs, each suited to particular challenges in graph data analysis.

Graph Convolutional Networks (GCNs)

Inspiration and Mechanism: Drawing on the success of Convolutional Neural Networks (CNNs) in image processing, GCNs adapt the convolution operation to graph data. Where a CNN filter combines a pixel with a fixed grid of neighboring pixels, a GCN layer combines each node's features with those of its neighbors, however many there are, so the model learns from each node's local neighborhood.

Applications: Primarily, GCNs excel in node classification tasks where each node's label depends on its neighbors. For instance, in social networks, GCNs can identify the role of individuals based on their connections.
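For reference, here is a plain-Python sketch of the widely used propagation rule from Kipf and Welling's GCN: add self-loops, normalize symmetrically by node degree, mix neighbor features, then apply a shared linear map and ReLU. The weight matrix here is fixed by hand rather than learned, and the function name gcn_layer and the toy path graph are hypothetical.

```python
import math


def gcn_layer(A, H, W):
    """One GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 H W).

    A: n x n 0/1 adjacency matrix (undirected)
    H: n x f node feature matrix
    W: f x o weight matrix (would be learned in practice)
    """
    n = len(A)
    # Add self-loops so each node keeps part of its own features.
    A_hat = [[A[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in A_hat]
    # Symmetric normalisation: entry (i, j) is divided by sqrt(deg_i * deg_j).
    norm = [[A_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    # Neighbourhood mixing, M = norm @ H: the graph "convolution".
    f = len(H[0])
    M = [[sum(norm[i][k] * H[k][c] for k in range(n)) for c in range(f)] for i in range(n)]
    # Shared linear transform followed by ReLU: relu(M @ W).
    o = len(W[0])
    return [[max(0.0, sum(M[i][c] * W[c][j] for c in range(f))) for j in range(o)]
            for i in range(n)]


# Path graph 0 - 1 - 2 with one made-up feature per node and an identity weight.
A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
H = [[1.0], [2.0], [3.0]]
print(gcn_layer(A, H, [[1.0]]))
```

The symmetric normalization keeps high-degree nodes from dominating the sum; in a node-classification model, two or three such layers are typically stacked, with a softmax on the last one.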

Graph Attention Networks (GATs)

Introduction of an Attention Mechanism: GATs extend this idea with an attention mechanism that learns how much importance to assign to each of a node's neighbors, instead of weighting neighbors by a fixed rule based on node degrees as a GCN does. This lets the network focus on the most relevant parts of the neighborhood, which can improve its predictive performance.

Benefits: The ability to weigh the neighbors' contributions makes GATs particularly useful in scenarios where not all connections in the network contribute equally to the output. This feature is invaluable in recommendation systems where the influence of neighboring users or items varies.
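Below is a single-head sketch of graph attention in plain Python, following the GAT formulation: score each neighbor with a LeakyReLU of a learned vector applied to the concatenated transformed features, then normalize the scores with a softmax over the neighborhood (including the node itself). Here the weights W and the attention vector a are hand-picked rather than trained, the final nonlinearity is omitted, and the function name gat_layer is hypothetical.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def matvec(W, h):
    """Multiply an out_dim x in_dim matrix W by the vector h."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def gat_layer(neighbors, H, W, a):
    """Single-head graph attention without the final nonlinearity.

    neighbors: dict node -> list of neighbour nodes (the self-loop is added here)
    H: dict node -> feature list; W: out_dim x in_dim; a: list of length 2 * out_dim
    Returns (new features, attention weights per node).
    """
    Wh = {v: matvec(W, h) for v, h in H.items()}
    out, attn = {}, {}
    for i, nbrs in neighbors.items():
        cand = [i] + [j for j in nbrs if j != i]
        # Unnormalised score e_ij = LeakyReLU(a . [W h_i || W h_j]).
        scores = [leaky_relu(sum(x * y for x, y in zip(a, Wh[i] + Wh[j]))) for j in cand]
        # Softmax over the neighbourhood turns scores into attention weights.
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        alpha = {j: e / total for j, e in zip(cand, exps)}
        attn[i] = alpha
        # New feature: attention-weighted sum of transformed neighbour features.
        out[i] = [sum(alpha[j] * Wh[j][d] for j in cand) for d in range(len(W))]
    return out, attn


neighbors = {0: [1, 2], 1: [0], 2: [0]}
H = {0: [1.0], 1: [2.0], 2: [3.0]}
W = [[1.0]]
out, attn = gat_layer(neighbors, H, W, a=[0.0, 1.0])
print(attn[0])  # node 0 attends most to node 2, whose transformed feature is largest
```

With a set to all zeros every neighbor receives equal weight, which makes the layer behave like a plain mean aggregator; a nonzero a is what lets the model favor some neighbors over others.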

GraphSAGE

Innovative Approach to Node Embeddings: GraphSAGE learns node embeddings by sampling and aggregating features from a node's local neighborhood. This produces low-dimensional representations that capture both a node's own features and its relational context. Because GraphSAGE learns aggregation functions rather than a fixed embedding for each node, it can also generate embeddings for nodes that were not seen during training.

Flexibility: The sampling technique enables GraphSAGE to efficiently handle large graphs by focusing on a subset of neighbors, making it scalable and adaptable to various sizes of graph data.
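The sampling idea can be sketched in plain Python as below, assuming a mean aggregator: each node draws at most k neighbors uniformly at random (without replacement, a simplification chosen for this sketch), averages their features, concatenates that average with its own features, and applies a shared linear map and ReLU. The names sample_neighbors and sage_layer, the fixed weights, and the star graph are hypothetical.

```python
import random


def sample_neighbors(adj, v, k, rng):
    """Uniformly sample at most k neighbours of v, without replacement."""
    nbrs = adj[v]
    return list(nbrs) if len(nbrs) <= k else rng.sample(nbrs, k)


def sage_layer(adj, H, W, k, seed=0):
    """Mean-aggregator layer: relu(W @ [h_v || mean(h_u for sampled u)]).

    adj: dict node -> list of neighbours; H: dict node -> feature list
    W: rows of length 2 * feature_dim (fixed by hand here, learned in practice)
    """
    rng = random.Random(seed)
    out = {}
    for v in adj:
        sampled = sample_neighbors(adj, v, k, rng)
        dim = len(H[v])
        if sampled:
            mean = [sum(H[u][d] for u in sampled) / len(sampled) for d in range(dim)]
        else:
            mean = [0.0] * dim
        z = H[v] + mean  # concatenate self and neighbourhood vectors
        out[v] = [max(0.0, sum(w * x for w, x in zip(row, z))) for row in W]
    return out


# Hypothetical star graph: hub 0 connected to 100 leaves.
adj = {0: list(range(1, 101))}
adj.update({leaf: [0] for leaf in range(1, 101)})
H = {v: [float(v)] for v in adj}
out = sage_layer(adj, H, W=[[0.0, 1.0]], k=5)
print(out[0])  # mean id of 5 sampled leaves, not of all 100
```

However many neighbors the hub has, its update touches only k of them, which is what keeps the cost per node bounded on large graphs.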

Recurrent Graph Neural Networks (R-GNNs)

Dynamic Graphs Handling: R-GNNs come into play in scenarios where the graph's structure changes over time. These networks are designed to capture the evolution of relationships, making them fit for analyzing temporal graphs.

Use Cases: They are particularly beneficial in areas like social media analysis, where the connections between users may evolve, or in transaction networks to monitor the creation and dissolution of links over time.
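As a rough illustration of how a recurrent update can follow a changing graph, the plain-Python sketch below carries node states from one graph snapshot to the next. It is a hand-written recurrent cell with fixed scalar weights and a tanh, standing in for the learned GRU or LSTM cells that practical models use; the function name recurrent_graph_update and its weights are hypothetical.

```python
import math


def recurrent_graph_update(snapshots, inputs, num_nodes, w_self=0.5, w_nbr=0.5, w_in=1.0):
    """Run a simple recurrent message-passing cell over a sequence of graph snapshots.

    snapshots: list of edge lists, one per time step (the structure may change)
    inputs: list (one per time step) of per-node scalar inputs
    Returns the node-state vector after every time step.
    """
    h = [0.0] * num_nodes
    history = []
    for edges, x in zip(snapshots, inputs):
        # Rebuild the neighbourhoods from this time step's edges.
        nbrs = [[] for _ in range(num_nodes)]
        for u, v in edges:
            nbrs[u].append(v)
            nbrs[v].append(u)
        new_h = []
        for v in range(num_nodes):
            msg = sum(h[u] for u in nbrs[v]) / len(nbrs[v]) if nbrs[v] else 0.0
            # New state mixes the previous state, neighbours' previous states and the new input.
            new_h.append(math.tanh(w_self * h[v] + w_nbr * msg + w_in * x[v]))
        h = new_h
        history.append(h)
    return history


# Hypothetical history: edge 0-1 exists at t=0 and t=1, then is replaced by 1-2 at t=2.
snapshots = [[(0, 1)], [(0, 1)], [(1, 2)]]
inputs = [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
states = recurrent_graph_update(snapshots, inputs, num_nodes=3)
print([round(x, 3) for x in states[-1]])
```

A signal that enters at node 0 reaches node 2 only because node 1 carried it forward in its state and the link 1-2 appeared later; if the graph never changed, node 2 would stay at zero.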

Autoencoder-based GNNs

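Graph Reconstruction and Link Prediction: These GNN variants use an autoencoder architecture: an encoder GNN compresses each node into an embedding, and a decoder tries to reconstruct the graph's edges from those embeddings. Pairs of nodes that the decoder scores highly but that are not linked in the input are candidates for missing links, which lets these models infer connections even where no direct link has been observed.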

Advantages: Autoencoder-based GNNs are instrumental in uncovering hidden patterns in the graph structure, such as predicting potential friendships in a social network or identifying likely interactions in a protein-interaction network.
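Here is a minimal link-prediction sketch in plain Python using the inner-product decoder of graph autoencoders such as Kipf and Welling's GAE, which scores a node pair as sigmoid(z_u · z_v). To stay short, it skips training: the encoder is a fixed averaging step, whereas in a real graph autoencoder the encoder is a trained GNN whose weights are fitted so that reconstructed scores match the observed edges. The names encode, link_probability, and reconstruct, and the data, are hypothetical.

```python
import math


def encode(adj, X):
    """Encoder stand-in: average each node's features with its neighbours' (untrained)."""
    Z = {}
    for v, nbrs in adj.items():
        group = [v] + list(nbrs)
        Z[v] = [sum(X[u][d] for u in group) / len(group) for d in range(len(X[v]))]
    return Z


def link_probability(Z, u, v):
    """Inner-product decoder: sigmoid(z_u . z_v)."""
    return 1.0 / (1.0 + math.exp(-sum(a * b for a, b in zip(Z[u], Z[v]))))


def reconstruct(adj, X):
    """Score every unordered node pair (u < v), observed or not."""
    Z = encode(adj, X)
    nodes = sorted(adj)
    return {(u, v): link_probability(Z, u, v) for i, u in enumerate(nodes) for v in nodes[i + 1:]}


# Hypothetical graph with two groups; the pairs 0-2 and 0-3 are not observed as edges.
adj = {0: [1], 1: [0, 2], 2: [1], 3: [4], 4: [3]}
X = {v: [1.0, -1.0] for v in (0, 1, 2)}
X.update({v: [-1.0, 1.0] for v in (3, 4)})
scores = reconstruct(adj, X)
print(round(scores[(0, 2)], 2), round(scores[(0, 3)], 2))  # 0.88 0.12
```

Neither 0-2 nor 0-3 is an edge in the input, but the decoder ranks 0-2 far higher because both nodes sit in the same group, which is the kind of hidden connection the paragraph above describes.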

Each of these GNN variants brings a unique set of tools to the table, addressing specific aspects of graph-structured data analysis. From understanding the static structure of graphs with GCNs and GATs to adapting to dynamic changes with R-GNNs, and even reconstructing and predicting links with Autoencoder-based GNNs, the landscape of graph neural networks is rich and varied. This diversity underscores the field's capacity for innovation, ensuring that as our data grows more interconnected, our methods for analyzing it become increasingly sophisticated.

Applications of Graph Neural Networks

Graph Neural Networks (GNNs) are reshaping how we understand interconnected data, offering innovative solutions across diverse domains. From social networks to quantum computing, GNNs are unlocking new possibilities for analysis and prediction by leveraging the intricate structures of graph data. Let's delve into the multifaceted applications of GNNs that highlight their transformative potential.

Social Network Analysis

Community Detection: GNNs excel in identifying and grouping similar nodes within larger networks, facilitating the discovery of communities or clusters. This capability is instrumental in social networks, where understanding group dynamics can lead to enhanced user engagement strategies.

Influence Estimation: By analyzing the interactions and connections within a network, GNNs can estimate the influence of particular nodes. This insight is crucial for identifying key influencers and understanding their impact on social media trends and information dissemination.

Recommendation Systems

Enhanced Accuracy: GNNs model the complex relationships between users and products to generate personalized recommendations. By capturing nuanced preferences and interactions within the network, GNNs can achieve higher accuracy than traditional recommendation engines that do not exploit this graph structure.

Dynamic Suggestions: As relationships and preferences evolve, GNNs dynamically update recommendations, ensuring they remain relevant and engaging for users. This adaptability is key to maintaining user interest over time.

Drug Discovery

Molecular Interaction Prediction: GNNs can predict how molecules interact, which is essential for identifying potential drug candidates and understanding their mechanisms of action. This application has the potential to accelerate the pace of drug discovery significantly.

Property Prediction: Beyond interactions, GNNs can also predict the properties of molecules, such as solubility and toxicity. This capability aids in the early identification of promising compounds, streamlining the drug development process.

Fraud Detection Systems

Pattern Recognition: GNNs identify unusual patterns in transaction networks, helping to detect fraudulent activity. By analyzing the relationships between transactions, GNNs can uncover subtle anomalies that might elude traditional detection methods.

Dynamic Analysis: The ability of GNNs to adapt to new data means that fraud detection systems can continuously evolve, staying one step ahead of sophisticated fraud techniques.

Traffic Prediction and Management

Road Network Utilization: Leveraging the graph structure of road networks, GNNs predict traffic flow and congestion, enabling more efficient traffic management.

Real-time Adjustments: GNNs facilitate dynamic routing and congestion mitigation strategies by processing real-time data, leading to smoother traffic flow and reduced travel times.

Quantum Computing

Graph Structured Data Sets: The emerging application of GNNs in quantum computing, as explored by Pasqal, involves handling graph-structured data sets. This application represents a novel intersection of quantum mechanics and graph theory, opening up new avenues for research and development.

Potential for Breakthroughs: Combining GNNs with quantum computing may eventually help solve certain complex problems more efficiently, although this remains an active area of research. It illustrates how broadly GNN ideas are being applied across industries.

The versatility of GNNs is evident in their wide-ranging applications, from enhancing social media analytics to pioneering advancements in quantum computing. By providing deeper insights into interconnected data, GNNs stand at the forefront of a new era in data analysis and prediction, poised to transform industries with their unique capabilities.